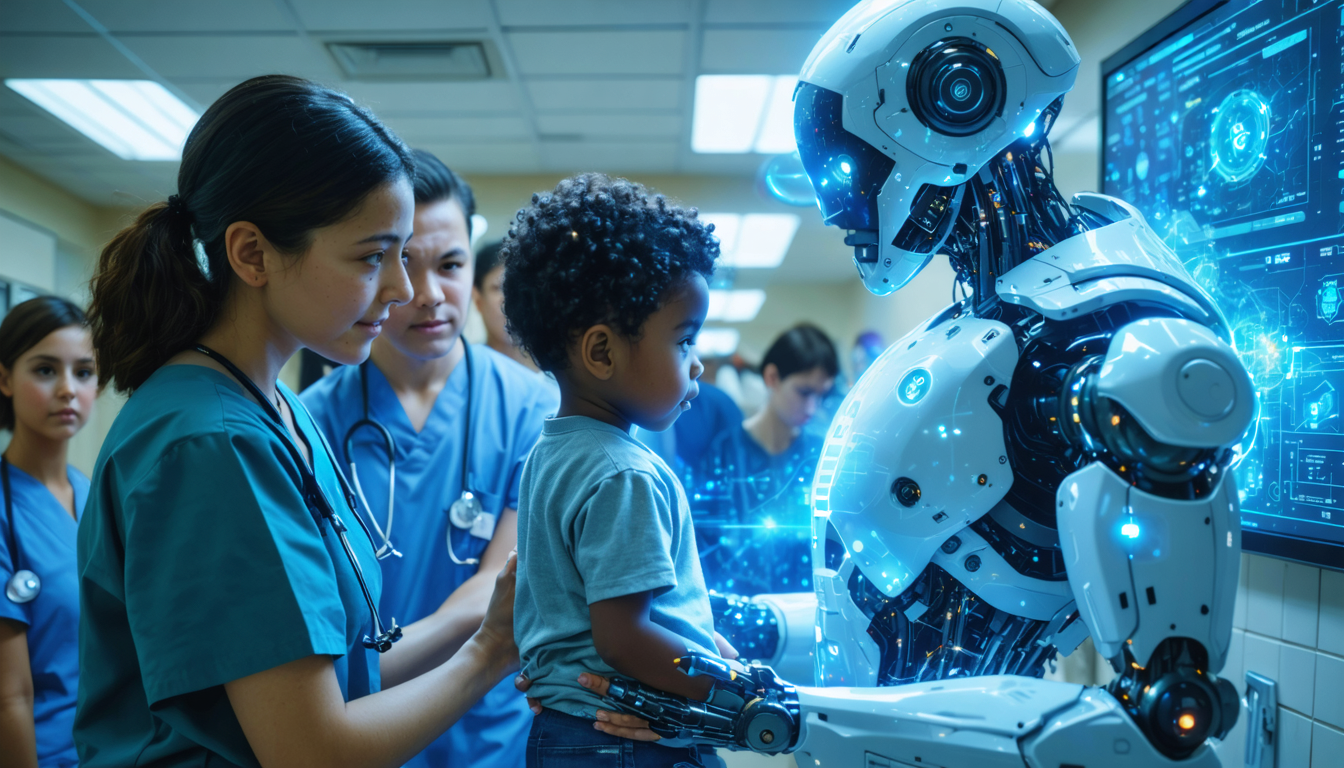

As technology takes on a larger role in daily life, artificial intelligence sometimes goes beyond routine assistance and helps save a life. In 2025, one moving story illustrates this unexpected role: ChatGPT, an AI known for its conversational capabilities, played a crucial part in the rescue of a child. Its urgent recommendation to the parents prompted a trip to the emergency room that proved vital. The episode, which reads like the plot of a medical drama, points to the new possibilities that intelligent technologies open up in medicine.

The symptoms of six-year-old Nyo initially seemed harmless. Recurring nausea, unusual fatigue, and headaches did not suggest anything serious. The sudden onset of strabismus and double vision, however, changed the picture drastically. With earlier medical consultations having produced no clear diagnosis, the parents decided to ask ChatGPT for advice. That decision led to a rapid, life-saving hospital intervention and addressed an imminent risk that their doctors had not been able to identify.

1. How ChatGPT turned parental worry into a true emergency rescue

2. Decoding the crucial diagnosis: how ChatGPT detected a life-threatening emergency

3. The gradual integration of artificial intelligence in the medicine of tomorrow

4. ChatGPT, a virtual “Dr. House” at your fingertips: opportunities and limits

5. A look at ethical and security challenges of emergency AI recommendations

6. The role of technology in saving children: between hope and vigilance

7. How parents can safely use ChatGPT for their children’s health

8. ChatGPT and the security revolution in pediatric emergencies in 2025

How ChatGPT turned parental worry into a true emergency rescue

Nyo’s story is emblematic of how artificial intelligence is advancing in healthcare. The symptoms could have passed for ordinary complaints, but they were getting worse, and turning to ChatGPT gave the parents a rapid analysis drawn from the large body of text, medical literature included, on which the model was trained. Rather than the generic answer often expected from a chatbot, ChatGPT flagged a severe health emergency and urged the parents to go to the hospital without delay.

This responsiveness is remarkable, given that several earlier medical consultations had failed to produce a diagnosis. By cross-referencing the precise symptoms the parents described, the chatbot suggested possible neurological complications requiring urgent intervention. The guidance is all the more impressive because it rested solely on a conversational exchange, which suggests that algorithms can complement human expertise where that expertise runs into limits or uncertainty.

Nyo’s case highlights one of the major strengths of this technology: turning health information into concrete, tailored recommendations when every minute counts. It is not a substitute for the doctor, but a valuable ally in rapid decision-making.

Decoding the crucial diagnosis: how ChatGPT detected a life-threatening emergency

Following ChatGPT’s recommendation, Nyo’s parents took their son to the emergency room. The seriousness of the situation was quickly confirmed: a brain tumor was causing an excessive build-up of cerebrospinal fluid, and the resulting intracranial pressure threatened the child’s life. Surgeons had to intervene immediately, placing a drain and performing a complex nine-hour operation.

The diagnosis, established through the medical tests that followed, had until then eluded the specialists the family had consulted. ChatGPT’s prompt push toward hospitalization very likely averted a tragedy: according to doctors, any further delay could have been fatal.

The case is a striking example of an artificial intelligence rapidly synthesizing a wide range of clinical information and flagging a medical emergency. It also suggests that, in certain circumstances, AI can serve as an additional safety net when a human diagnosis is slow to emerge.

The tumor diagnosis illustrates how artificial intelligence and human medicine complement each other: AI replaces neither clinical examination nor medical responsibility, but it can significantly enrich the healthcare ecosystem.

The gradual integration of artificial intelligence in the medicine of tomorrow

Over the years, AI has established itself in the medical field, notably for image analysis, patient record management, and proposing differential diagnoses. Nyo’s story echoes other cases where ChatGPT helped identify rare pathologies.

One example is Alex, a child who had suffered from unexplained pain for three years. His mother, desperate, pasted his MRI results into ChatGPT, which suggested tethered cord syndrome, a hypothesis later confirmed by a neurosurgeon. Such stories point to a shift in which artificial intelligence becomes a kind of reference tool, able to process a volume of medical data that no single professional could take in.

This convergence nevertheless raises ethical and technical questions, notably about data security, the reliability of recommendations, and the doctor’s role alongside a virtual assistant. Even so, the trend is clear: as algorithms continue to develop, tools like ChatGPT could become indispensable partners in medical care.

This dynamic encourages healthcare stakeholders to plan for a responsible, supervised, and well-regulated integration of AI into the care chain.

ChatGPT, a virtual “Dr. House” at your fingertips: opportunities and limits

The image of Dr. House, a brilliant but unconventional doctor, fits the growing use of ChatGPT in diagnostic support well. The AI can suggest rare or unexpected leads by analyzing symptoms that neither a first-line diagnosis nor several specialists have managed to decipher.

Yet the comparison must be handled with caution. Unlike the fictional character, who acts on instinct and a certain cynicism, ChatGPT relies on probabilities and on the data it was trained on. Erroneous, incomplete, or biased data can therefore lead to an inaccurate diagnosis. Experts accordingly stress that it should never be used as a substitute for a medical examination or professional advice.

The advantages of this technology are numerous:

- Rapid analysis of complex data: simultaneous processing of a large volume of symptoms and records.

- Patient guidance: help in deciding whether to see a doctor or go straight to the emergency room.

- Detection of weak signals: identification of rare conditions that are often little known.

- Accessibility: availability 24/7, without geographic constraints.

However, caution remains necessary to avoid erroneous diagnoses and the risks of self-medication based on a cursory reading of algorithmic suggestions.

A look at ethical and security challenges of emergency AI recommendations

As ChatGPT’s audience and medical uses grow, several issues around security and personal data protection are emerging. These tools collect sensitive information that must be rigorously protected to safeguard patients’ privacy.

Emergency recommendations also raise questions of liability. Who is liable if an analysis error occurs? What margin of error is acceptable when an AI steers a patient toward hospitalization?

These questions point to the need for a legal and regulatory framework adapted to health technologies. In 2025, several protocols are beginning to emerge that provide for algorithm certification and mandatory human oversight of this kind of digital interaction.

For patients and families, it is essential to treat ChatGPT as a tool that complements human medical expertise rather than replacing it. Dialogue with a professional remains indispensable to confirm or refute an algorithmic analysis.

Comparative table: Advantages and risks of medical recommendations by artificial intelligence

| Criteria | Advantages | Risks |
|---|---|---|
| Speed | Immediate response for critical situations | Overdiagnosis or excessive panic |
| Accessibility | 24/7 availability, without geographic constraints | Excessive dependence and loss of personal medical judgment |
| Analysis | Processing of large complex databases | Misinterpretation if data is incomplete or biased |
| Data security | Progress in confidentiality and anonymization | Risks of leaks or malicious uses |
| Liability | Support for human decision-making | Legal gray areas in case of medical error |

The role of technology in saving children: between hope and vigilance

Stories like those of Nyo and Alex demonstrate that artificial intelligence can become a true hero for families facing complex or unexpected medical situations. This technology offers additional hope when conventional medicine reaches its limits.

Yet this hope must not obscure the risks. Overconfidence in algorithms could cost precious time or even lead to tragic errors. Families should therefore understand that these tools are decision aids, not definitive verdicts.

Technology does not replace dialogue and medical oversight, but it can sometimes point the way forward when the situation is urgent.

Thus, Nyo’s rescue is much more than an anecdote: it is a concrete example of successful AI integration into the healthcare system, a powerful testimony to the benefits that technology can bring when used wisely.

How parents can safely use ChatGPT for their children’s health

In moments of worry, it is tempting for parents to seek immediate answers on the Internet or from AI tools like ChatGPT. For this use to be helpful rather than dangerous, several best practices should be followed:

- Provide precise descriptions: a detailed list of symptoms with context is essential for reliable analysis.

- Never replace medical advice: ChatGPT can guide, but only a qualified professional can make a diagnosis.

- Use ChatGPT as a complement: integrate its recommendations into the existing medical process.

- Stay vigilant: if symptoms worsen or anything seems wrong, go to the emergency room first.

- Protect personal data: avoid sharing more sensitive information than necessary during exchanges.

Used thoughtfully by families, this tool can improve safety and support early care for children at risk.

List of practical tips for using ChatGPT in child healthcare

- Note all symptoms without omitting details

- Rephrase questions to clarify your concern

- Keep a history of conversations

- Use recommendations to discuss with your doctor

- Act promptly in case of emergency

ChatGPT and the security revolution in pediatric emergencies in 2025

As more cases emerge in which artificial intelligence acts as a key trigger in pediatric emergencies, hospitals are integrating AI tools into their intake protocols. Some equip their emergency departments with computerized systems that analyze patient data in real time and flag high-risk cases.

This technological shift can improve the responsiveness of medical teams, reduce initial diagnostic errors, and help optimize therapeutic choices, making care safer for children.

In this context, ChatGPT and other AI systems become essential partners, able to advise families from the first signs and to help emergency departments prepare for a rapid and appropriate intervention.

This new medical paradigm, centered on collaboration between AI and professionals, puts patient safety and health at the heart of innovation.

Can ChatGPT replace a doctor in an emergency?

No. ChatGPT is a decision support tool and can never replace a diagnosis made by a medical professional.

How can you keep your data secure when using ChatGPT?

Avoid disclosing sensitive personal information, and follow the privacy rules of the platform you use.

What should you do if ChatGPT recommends emergency care?

Parents should go to the emergency room immediately and tell the doctors that the recommendation came from an artificial intelligence.

Can AI detect all rare diseases?

No. Although AI draws on a very large body of data, some rare diseases may escape its analysis. It remains a complement, not a substitute.

What are the risks of AI-based self-medication?

Self-medication without medical advice can lead to serious errors; it is crucial to consult a healthcare professional before starting any treatment.