As artificial intelligence spreads rapidly through French companies, its benefits are clear: higher productivity, faster digital transformation, and the automation of many tasks. Yet this development carries significant risks, often unknown or underestimated, that can weaken the security, confidentiality, and even the long-term viability of organizations. The Directorate General for Internal Security (DGSI) recently published an Interference Flash highlighting these vulnerabilities through three real cases experienced by French companies, showing the dangers that arise when AI tools are used without full control.

These examples expose several facets of the technological and organizational challenge: first, the handling of sensitive data through uncontrolled external tools; second, excessive trust in autonomous decision-making systems; and third, the rise of sophisticated attacks using deepfake technologies. This article examines these three cases to explore the major issues French companies face when adopting artificial intelligence: ethical risks, algorithmic biases, data protection, and IT security. It also assesses how appropriate AI regulation, combined with rigorous governance, can prevent costly errors and preserve the integrity of national economic structures.

- 1 Inappropriate use of generative AI: an alarming example for French companies

- 2 Dangers of dependence on AI for strategic decision-making in French companies

- 3 Sophisticated deepfake scams, a growing threat for French companies

- 4 Key recommendations to frame the use of artificial intelligence in French companies

- 5 Statistics and economic impacts of artificial intelligence in French companies

- 6 Ethical risks and algorithmic biases: challenges for French companies facing artificial intelligence

- 7 Digital transformation and data protection challenges in French companies

- 8 Strategic approach for successful AI integration in French companies

Inappropriate use of generative AI: an alarming example for French companies

The first case highlighted by the DGSI concerns a strategic company that used a publicly available generative artificial intelligence tool, developed abroad, to translate sensitive internal documents. Certain employees had adopted the tool on their own initiative, attracted by the efficiency and speed it offered in day-to-day content management. This practice, started without management approval or prior review, quickly showed its limits when the IT department detected a potential leak during an internal audit.

Free or foreign-hosted services are particularly risky because the data entered may be used in the background to train artificial intelligence models, often without the user being explicitly informed. This poses a major threat to the confidentiality of information, especially when the providers' servers are located outside France or even outside Europe, which exposes the data to foreign laws that are sometimes less strict.

Faced with this finding, the company replaced the service with a paid in-house solution offering a secure environment compliant with national standards. A dedicated working group was also set up to develop a strict usage doctrine for AI tools. This approach illustrates how important it is for companies to structure their use of advanced technologies to avoid security breaches. Connections to external interfaces or uncontrolled plugins widen the attack surface and increase exposure to intrusion.

Looking at this first example as a whole, it becomes clear that the temptation to resort to simple and free tools may hide considerable risks. For all French companies engaged in digital transformation, it is imperative to adopt a proactive IT security policy and integrate data protection into their AI tool evaluation criteria from the initial phases.

Dangers of dependence on AI for strategic decision-making in French companies

The second case raised by the intelligence services involves a French company in the midst of international expansion that delegated a critical part of its decision-making process, due diligence, to an artificial intelligence system designed abroad. The aim was to evaluate potential commercial partners in unfamiliar markets faster and more efficiently.

In the short term, this automation proved attractive because it generated detailed reports instantly. However, because of their workload and a lack of specialized staff to verify the results, teams tended to accept the outputs blindly, without seeking additional expert validation. Yet these systems give probabilistic answers: they return the statistically most likely response, not one that is free of bias or fully adapted to the context.

Algorithmic biases, introduced either during model design or through training data, can skew results. Moreover, the well-known “hallucinations” (false, imprecise, or fabricated output) undermine the reliability of these tools. Together they raise the risk of strategic decisions based on flawed analyses, leading to commercial losses, contractual disputes, or a breach of trust.

The example shows the need to keep expert human control in decision loops, for instance by appointing AI leads responsible for rephrasing, verifying, and weighing the recommendations these systems generate. Human vigilance remains an indispensable safeguard against narrower thinking and automated decisions disconnected from the real context in which French companies operate.

This case also illustrates another major technological challenge: how to combine the optimization brought by automation and digital transformation with maintaining rigorous strategic oversight? Training programs and awareness of the inherent risks of artificial intelligence use become essential to preserve agility and resilience of companies in a competitive international market.

Sophisticated deepfake scams, a growing threat for French companies

The third case mentioned by the DGSI reveals a particularly sophisticated scam attempt involving the use of deepfake technologies. A manager at an industrial site received a video call from an interlocutor perfectly imitating the appearance and voice of the group’s leader, requesting a financial transfer as part of an acquisition project. This deception was only detected thanks to the vigilance and instinct of the manager, who quickly interrupted the communication and alerted management.

Audio and video manipulation technologies have become strikingly convincing. Between 2023 and 2025, the volume of deepfake content on the web grew more than tenfold, and several million such videos, images, or recordings circulate daily. This growth has accelerated sharply with the spread of generative AI tools and speech synthesis techniques.

These attacks often rely on the meticulous collection and analysis of a company's public and private data to personalize fraudulent messages, making them more credible and more manipulative. Nearly half of companies report having been targeted by such attempts, which shows the scale of the problem in France. The industrial sector, with its significant economic stakes, is particularly exposed.

French companies therefore need to deploy appropriate IT security measures: training employees to recognize deepfakes, applying strict verification protocols to financial requests, and using technical solutions capable of detecting falsified content. These measures must go hand in hand with digital transformation so that these innovations do not become levers for fraud and disinformation.
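A strict verification protocol for financial requests can be expressed as a simple rule check. The following is a minimal Python sketch, not a procedure taken from the DGSI note: the `TransferRequest` fields, the channel names, and the 10,000 euro threshold are all hypothetical values chosen for illustration. It encodes two common controls: confirming any remotely received request by calling back a number from the internal directory, and requiring an independent second approver above a set amount.

```python
from dataclasses import dataclass
from typing import List, Optional

# Hypothetical policy values, chosen for illustration only.
SECOND_APPROVER_THRESHOLD_EUR = 10_000
REMOTE_CHANNELS = {"video_call", "phone", "email", "chat"}


@dataclass
class TransferRequest:
    requester: str                         # person the caller claims to be
    amount_eur: float
    channel: str                           # how the request arrived
    callback_confirmed: bool = False       # confirmed via a number from the internal directory
    second_approver: Optional[str] = None  # independent person who signed off


def missing_checks(req: TransferRequest) -> List[str]:
    """Return the controls still missing; an empty list means the transfer may proceed."""
    missing = []
    # A face or a voice on a call proves nothing: confirm through a known channel.
    if req.channel in REMOTE_CHANNELS and not req.callback_confirmed:
        missing.append("callback_to_known_number")
    # Large amounts need a second person who is not the requester.
    if req.amount_eur >= SECOND_APPROVER_THRESHOLD_EUR:
        if req.second_approver is None or req.second_approver == req.requester:
            missing.append("independent_second_approver")
    return missing


# A request resembling the third case: large amount, video call, no verification yet.
urgent = TransferRequest(requester="group_ceo", amount_eur=250_000, channel="video_call")
print(missing_checks(urgent))
```

In this sketch, a request like the one in the third case stays blocked until both controls are satisfied, however convincing the caller looks or sounds.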

Key recommendations to frame the use of artificial intelligence in French companies

The DGSI’s feedback emphasizes the need for rigorous oversight to avoid identified dangers while promoting the adoption of artificial intelligence and its many benefits. The first imperative is the clear definition of an internal policy dedicated to regulating uses and types of data that may be processed via AI tools.

French companies are encouraged to favor local or European solutions to limit risks related to data sovereignty and legal protection. Continuous employee training is another fundamental pillar: it helps staff understand the stakes, identify risks, and follow the rules.

The DGSI also recommends establishing internal transparency mechanisms, notably through a reporting channel for any suspicious incident, and instituting regular reviews of practices associated with artificial intelligence. The integration of specialized human validations must be systematic, especially for strategic or sensitive decisions.

Finally, collaboration between public and private stakeholders, including AI regulatory authorities, is essential to define a clear and evolving framework capable of accompanying technological changes without compromising ethical values and the security of French companies.

- Define a clear internal policy on AI use

- Prefer local or European solutions

- Regularly train employees

- Establish an incident reporting channel

- Ensure systematic human validation for critical decisions

- Ensure transparency and regular practice reviews

- Collaborate with authorities for an adapted regulatory framework
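The first recommendation, an internal policy defining which types of data may be processed by which AI tools, lends itself to a small machine-checkable form. The sketch below is one possible way to encode it in Python, under stated assumptions: the four sensitivity levels and the three tool names are hypothetical and do not come from the DGSI note. Each approved tool gets a ceiling, and any tool not on the list is refused by default.

```python
from enum import IntEnum


class Sensitivity(IntEnum):
    # Hypothetical classification levels, ordered from least to most sensitive.
    PUBLIC = 0
    INTERNAL = 1
    CONFIDENTIAL = 2
    STRATEGIC = 3


# Hypothetical registry: the highest data level each tool is approved for.
APPROVED_TOOLS = {
    "public_chatbot": Sensitivity.PUBLIC,
    "eu_hosted_assistant": Sensitivity.INTERNAL,
    "on_prem_translator": Sensitivity.STRATEGIC,
}


def is_use_allowed(tool: str, data_level: Sensitivity) -> bool:
    """Allow a use only if the tool is registered and the data does not exceed its ceiling."""
    ceiling = APPROVED_TOOLS.get(tool)
    if ceiling is None:
        return False  # unlisted tools are denied by default
    return data_level <= ceiling


# The first case, restated: sensitive documents sent to a public tool.
print(is_use_allowed("public_chatbot", Sensitivity.CONFIDENTIAL))
print(is_use_allowed("on_prem_translator", Sensitivity.CONFIDENTIAL))
```

Denying unlisted tools by default is a deliberate choice here: it covers the situation in which employees adopt a new service on their own before anyone has reviewed it.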

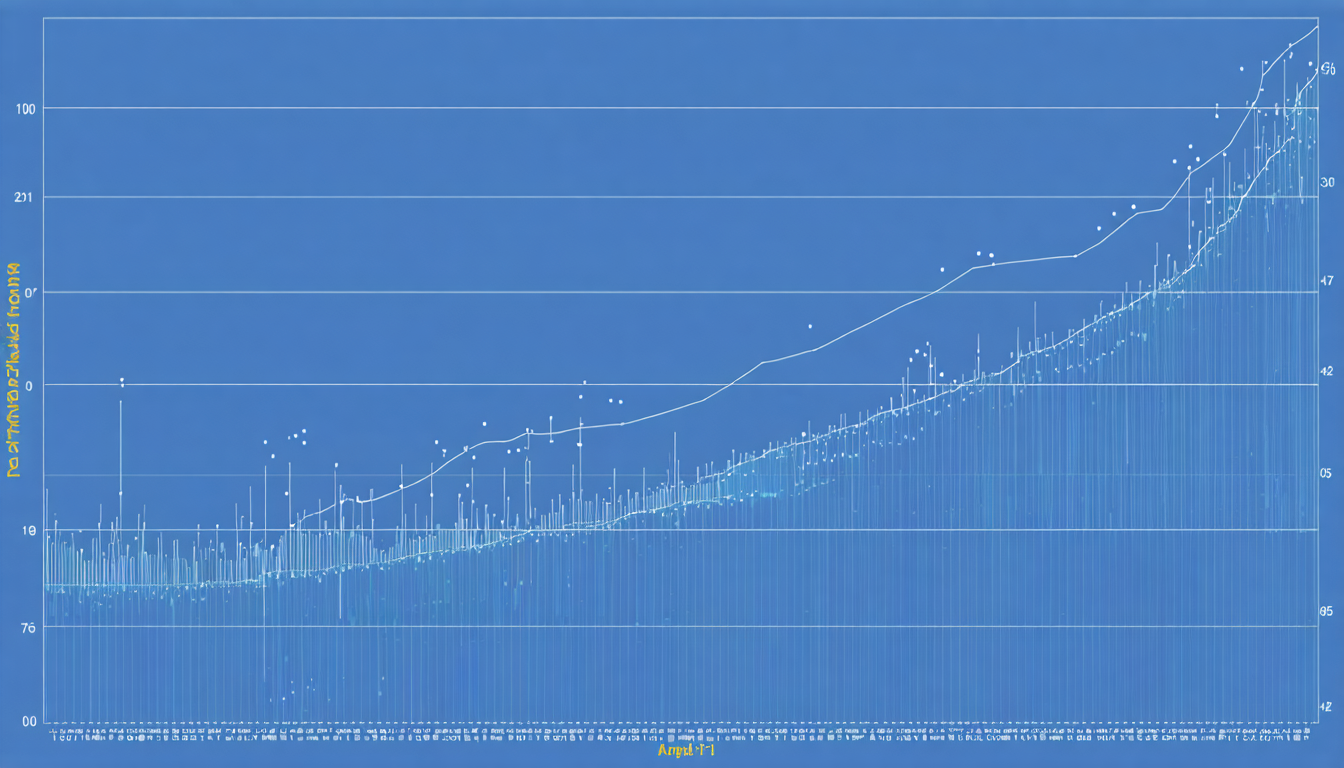

Statistics and economic impacts of artificial intelligence in French companies

The figures paint a mixed picture of economic benefits and potential vulnerabilities. Nearly 78% of companies worldwide already use artificial intelligence in at least one of their functions. In France, adoption is rising steadily, driven by the digital transformation under way in many sectors.

According to several studies, AI-related productivity gains can reach up to 55%. The average financial return is estimated at around $3.70 generated per dollar invested. These prospects attract business leaders but sometimes mask intrinsic risks, especially in IT security and privacy compliance.

This table summarizes some key indicators:

| Indicator | Value | Comments |
|---|---|---|
| AI adoption rate in companies (France) | 70% | Strong increase since 2023 |
| Productivity gains linked to AI | Up to 55% | Variability depending on sector and use |
| Average financial return | $3.70 per dollar invested | Significant advantage over traditional investments |
| Companies without formal AI policy | 1/3 | Lack of governance exposing to risks |
| Companies targeted by deepfakes | 46% | Rapid spread of this threat |

Ethical risks and algorithmic biases: challenges for French companies facing artificial intelligence

Beyond security and data protection issues, French companies face a fundamental challenge linked to algorithmic biases. These biases arise when the data used to train models is only partially representative or when implicit prejudices find their way into the programs. This can lead to discrimination, distorted analysis results, or compromised automated decisions.

The ethical risks tied to these biases are especially serious for companies in sensitive sectors such as finance, health, or human resources. For example, a biased AI may unfairly influence recruitment decisions, penalize certain customer profiles, or favor questionable commercial choices.

To address these issues, companies must implement regular algorithm audits, carry out ethical compliance tests, and reinforce diversity in development teams to limit blind spots. Raising awareness among decision-makers about these risks and integrating corrective mechanisms is also essential.

Finally, European AI regulation now imposes strict requirements on bias evaluation and transparency, obliging French companies to comply or face legal sanctions and damage to their reputation.

Practical measures to reduce algorithmic biases

- Conduct independent audits of the models in use

- Involve multidisciplinary experts in algorithm development

- Use representative and diverse datasets

- Implement mechanisms for explainability of algorithmic decisions

- Train teams on ethical risks related to AI
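As an illustration of what a basic model audit can measure, the Python sketch below compares how often an automated screening step selects candidates from different groups. It is a simplified example under stated assumptions: the group labels and the sample data are invented, and the 0.8 alert level is the "four-fifths" rule of thumb used in some US employment guidance, taken here as an assumption and not as a requirement stated by the DGSI or by European regulation.

```python
from collections import defaultdict


def selection_rates(records):
    """records: iterable of (group, selected) pairs. Returns {group: share selected}."""
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, was_selected in records:
        totals[group] += 1
        if was_selected:
            selected[group] += 1
    return {group: selected[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Lowest selection rate divided by the highest; 1.0 means all groups are treated alike."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected in any group, so there is no gap to measure
    return min(rates.values()) / highest


# Invented sample: group A has 5 of 10 selected, group B has 2 of 10 selected.
sample = ([("A", True)] * 5 + [("A", False)] * 5
          + [("B", True)] * 2 + [("B", False)] * 8)

rates = selection_rates(sample)
ratio = disparate_impact_ratio(rates)
ALERT_BELOW = 0.8  # assumed rule-of-thumb threshold, not a legal standard
print(rates, round(ratio, 2), "review needed" if ratio < ALERT_BELOW else "ok")
```

A low ratio does not prove discrimination on its own, but it flags a result that human reviewers should examine before the model's output is relied on.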

Digital transformation and data protection challenges in French companies

Digital transformation, accelerated by intensive use of artificial intelligence, requires stronger data protection policies. French companies must not only comply with the European GDPR but also anticipate specific requirements for the confidentiality and integrity of data processed by AI systems.

Handling sensitive data via automated interfaces can increase the risks of leaks and misuse. Consequently, a reinforced cybersecurity policy must combine the use of technical tools and rigorous governance, including defining clear roles and accountability of actors.

Data security also involves controlling external flows. French companies are encouraged to limit exchanges with uncertified external platforms or those located outside the European Union, favoring sovereign solutions that guarantee enhanced data control. This approach helps maintain the trust of clients and partners, a key factor in consolidating long-term business relationships.

Strategic approach for successful AI integration in French companies

Finally, to maximize their chances of success in digital transformation and minimize risks, French companies must adopt a comprehensive, integrated strategy. This involves not only artificial intelligence technologies but also team training, the revision of business processes, and security and compliance management.

Adapted AI governance includes appointing dedicated leaders, establishing ethical charters, and close collaboration with regulatory authorities. This framework must also encourage innovation while ensuring responsible and transparent use.

The story of these three companies illustrates that innovation does not come without vigilance. Developing internal skills, raising risk awareness, and transparency on authorized practices ensure a balance between exploiting AI’s potential and managing inherent dangers.