In the era of artificial intelligence, machine visual perception has never been more advanced. Meta has just reached a major milestone with its SAM3 and SAM3D models, which push image recognition and computer vision a step further. Built on deep learning and image processing techniques, these systems go beyond simple object segmentation: they can understand, isolate and even reconstruct in three dimensions the elements of a scene, which opens up a wide range of new uses.

In 2025, these models stand out for three capabilities: identifying objects from a simple textual description, keeping track of them consistently through complex video sequences, and reconstructing them in 3D from ordinary images. Trained on a base of over 11 million images, SAM3 and SAM3D illustrate the potential at the intersection of artificial intelligence and computer vision.

This shift concerns not only researchers and developers but also the creative, commercial and scientific industries. As image processing becomes more intuitive, efficient and precise, models like SAM3 and SAM3D could reshape fields as diverse as surveillance, online commerce, robotics and biodiversity conservation. Below is a detailed overview of these innovations and their concrete implications for image recognition.

- 1 How Meta SAM3 revolutionizes image recognition through intelligent segmentation

- 2 SAM3D: the three-dimensional vision technology that transforms a simple photo into a 3D object

- 3 Detailed key features of Meta SAM3 and SAM3D for advanced computer vision

- 4 Development supported by a gigantic Data Engine and its impact on accuracy

- 5 Concrete applications of Meta SAM3 / SAM3D in industry, science, and leisure

- 6 Current constraints and limitations of Meta SAM3 / SAM3D to consider

- 7 Comparison between Meta SAM3 / SAM3D and other AI image recognition technologies

- 8 Accessibility, costs, and usage models of Meta SAM3 / SAM3D in the current technological landscape

How Meta SAM3 revolutionizes image recognition through intelligent segmentation

The Meta SAM3 model marks a real step forward in AI-based image recognition. Where earlier Segment Anything models segmented one object per visual prompt, SAM3 can segment, with high precision, every object in an image or video that matches what the user asks for. The request can be a simple click or a short textual description, which greatly simplifies human-machine interaction.

One of SAM3's strengths is that it works on concepts it was not specifically trained for (so-called zero-shot or open-vocabulary segmentation): the user does not need to collect examples or retrain the model before asking for a new kind of object. For example, an amateur photographer who wants to extract all the red bicycles from an urban scene only needs to type a short description such as “red bicycle.” SAM3 then identifies every matching object and traces its contours, with no manual outlining or data preparation. This feature, called Promptable Concept Segmentation (PCS), combines natural language understanding with deep-learning-based visual analysis.

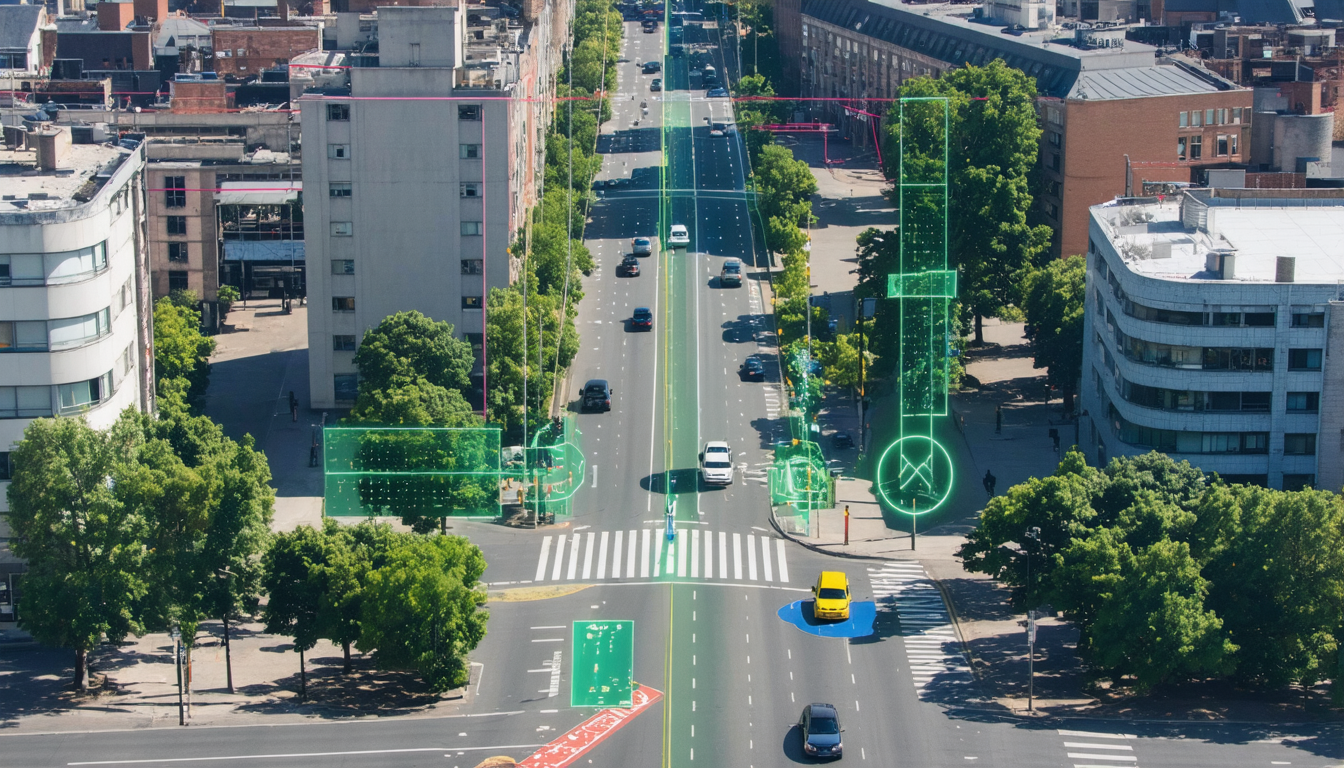

Intelligent segmentation does not stop at still images: SAM3 also works on video, where it uses “masklets” (masks that follow a single object from frame to frame) to track objects even when they are partially occluded or moving through dynamic scenes. In a surveillance video, for instance, if a person walks behind an obstacle, the model can keep following them and keep the same identity when they reappear. This makes temporal tracking more reliable and paves the way for applications such as automated video surveillance or instant video editing tools.
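SAM3's real tracker is learned and memory-based; the sketch below only illustrates the idea of keeping an object's identity alive through a short occlusion, using a deliberately simple greedy matcher on bounding boxes instead of masks. The `SimpleTracker` class, the `max_missed` patience parameter and the 0.3 intersection-over-union (IoU) threshold are illustrative assumptions, not part of SAM3.

```python
from dataclasses import dataclass

Box = tuple[float, float, float, float]  # (x0, y0, x1, y1)


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    ix0, iy0 = max(a[0], b[0]), max(a[1], b[1])
    ix1, iy1 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix1 - ix0) * max(0.0, iy1 - iy0)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


@dataclass
class Track:
    track_id: int
    box: Box
    missed: int = 0  # consecutive frames without a matching detection


class SimpleTracker:
    """Hypothetical greedy IoU tracker that tolerates short occlusions."""

    def __init__(self, iou_threshold: float = 0.3, max_missed: int = 5):
        self.iou_threshold = iou_threshold
        self.max_missed = max_missed
        self.tracks: list[Track] = []
        self._next_id = 0

    def update(self, detections: list[Box]) -> dict[int, Box]:
        """Match this frame's detections to existing tracks; return visible ones."""
        unmatched = list(detections)
        for track in self.tracks:
            best = max(unmatched, key=lambda d: iou(track.box, d), default=None)
            if best is not None and iou(track.box, best) >= self.iou_threshold:
                track.box, track.missed = best, 0
                unmatched.remove(best)
            else:
                # Not seen this frame (e.g. occluded): remember its last box.
                track.missed += 1
        # Forget objects that have been missing for too long.
        self.tracks = [t for t in self.tracks if t.missed <= self.max_missed]
        # Leftover detections start new identities.
        for det in unmatched:
            self.tracks.append(Track(self._next_id, det))
            self._next_id += 1
        return {t.track_id: t.box for t in self.tracks if t.missed == 0}
```

If a person is detected, disappears behind an obstacle for two frames and then reappears slightly further along, the tracker reports the same identity `0` again, because the stored box is still close enough to the new detection.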

SAM3's unified architecture also relies on a single backbone that processes images and videos in the same way. Sharing one backbone avoids running separate image and video models, which helps keep resource consumption and latency down, even in demanding contexts. Thanks to this design, SAM3 makes image recognition smoother and more accessible, a good example of how artificial intelligence is reshaping computer vision in 2025.

SAM3D: the three-dimensional vision technology that transforms a simple photo into a 3D object

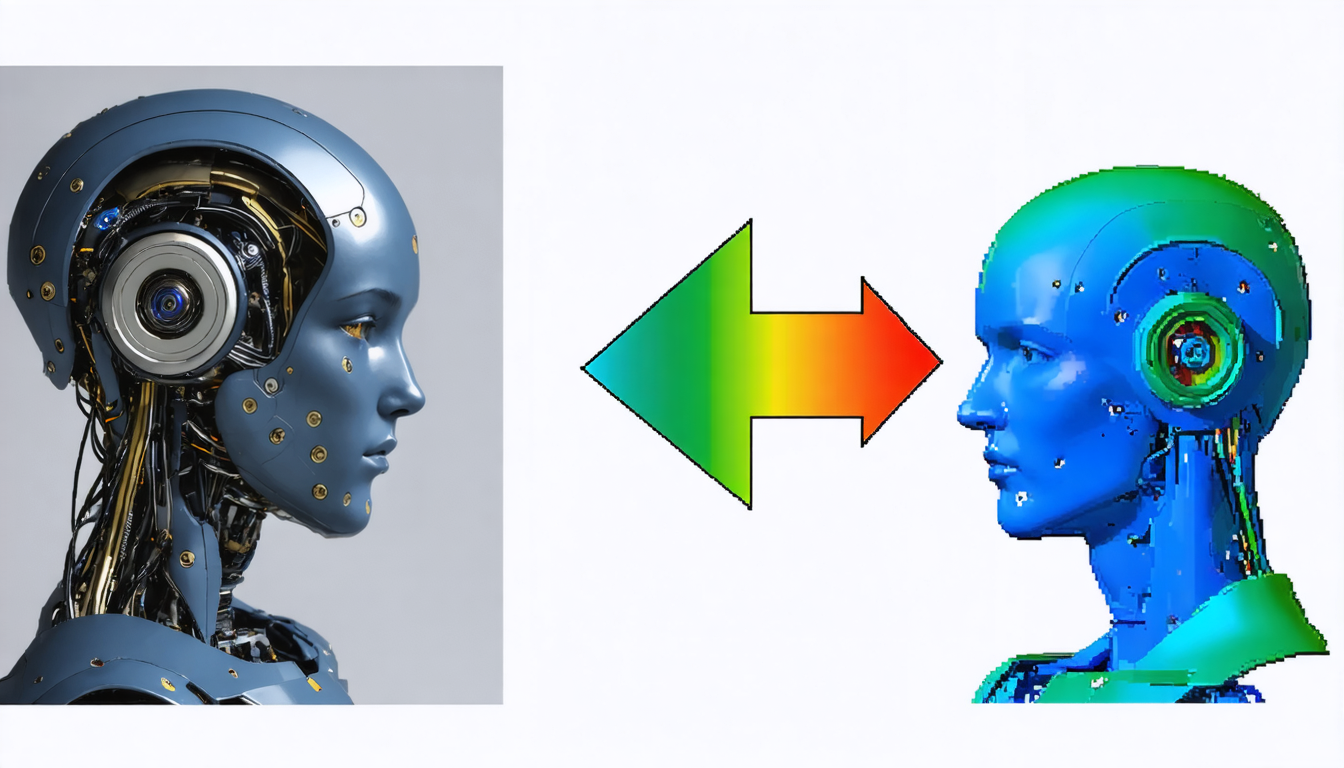

Beyond classic segmentation, Meta introduces SAM3D, a model that takes image recognition into three-dimensional space. Drawing on reference data from multi-camera capture and LiDAR scans, SAM3D reconstructs objects in 3D from ordinary images, opening a new chapter in automatic visual interpretation.

This technology relies on two specialized modules. SAM 3D Objects targets everyday inanimate objects: from a single photo, it generates a textured 3D mesh that can be manipulated, and it copes with partial occlusions. If an object is partly hidden behind another, SAM3D predicts the missing shape by inferring it from context. For example, in an image where a cup partially hides a vase, the vase is reconstructed in its entirety, something traditional single-image methods struggle to do.
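To make “textured mesh” concrete: such a mesh is usually a list of 3D vertices, a list of triangular faces that index into them, and texture (UV) coordinates mapping each vertex to a point in a 2D texture image. The sketch below writes a single textured square in the Wavefront OBJ text format. It shows only the general data layout; the `TexturedMesh` class is hypothetical, and the export format SAM 3D Objects actually uses is not assumed here. In a full OBJ export the texture image itself would be referenced from a separate `.mtl` material file, omitted for brevity.

```python
from dataclasses import dataclass


@dataclass
class TexturedMesh:
    """Hypothetical minimal mesh: positions, per-vertex UVs, triangles."""

    vertices: list[tuple[float, float, float]]  # 3D positions
    uvs: list[tuple[float, float]]              # texture coordinates in [0, 1]
    faces: list[tuple[int, int, int]]           # 0-based vertex indices

    def to_obj(self) -> str:
        """Serialize to Wavefront OBJ text, pairing vertex i with UV i."""
        n = len(self.vertices)
        if len(self.uvs) != n:
            raise ValueError("expected one UV per vertex")
        if any(not 0 <= i < n for face in self.faces for i in face):
            raise ValueError("face index out of range")
        lines = [f"v {x} {y} {z}" for x, y, z in self.vertices]
        lines += [f"vt {u} {v}" for u, v in self.uvs]
        # OBJ indices are 1-based; "a/b" means vertex a with texture coord b.
        for face in self.faces:
            lines.append("f " + " ".join(f"{i + 1}/{i + 1}" for i in face))
        return "\n".join(lines) + "\n"


# A unit square split into two triangles, with the full texture stretched over it.
quad = TexturedMesh(
    vertices=[(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (1.0, 1.0, 0.0), (0.0, 1.0, 0.0)],
    uvs=[(0.0, 0.0), (1.0, 0.0), (1.0, 1.0), (0.0, 1.0)],
    faces=[(0, 1, 2), (0, 2, 3)],
)
```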

For living beings, especially humans, SAM 3D Body uses a new body representation that distinguishes the skeleton from the flesh and even clothing, allowing fine analysis of poses and complex movements. This makes digital avatars move more fluidly and naturally and opens the door to applications in virtual reality, animation and ergonomics.
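Separating the skeleton from the body surface means a pose can be described as joint rotations applied along a chain of bones, with the visible surface attached afterwards. The 2D forward-kinematics sketch below shows only that skeletal half of the idea, on a hypothetical arm with three joints (shoulder, elbow, wrist) and two bones; SAM 3D Body's actual body model is three-dimensional and much richer, and the function name is illustrative.

```python
import math


def forward_kinematics(bone_lengths, joint_angles, origin=(0.0, 0.0)):
    """Return the 2D position of every joint in a chain of bones.

    Each angle (radians) is relative to the parent bone, so rotating one
    joint moves every joint further down the chain.
    """
    if len(bone_lengths) != len(joint_angles):
        raise ValueError("need one angle per bone")
    x, y = origin
    heading = 0.0
    positions = [(x, y)]
    for length, angle in zip(bone_lengths, joint_angles):
        heading += angle  # accumulate rotations down the chain
        x += length * math.cos(heading)
        y += length * math.sin(heading)
        positions.append((x, y))
    return positions
```

With a 0.3 m upper arm, a 0.25 m forearm, the shoulder at 0 and the elbow bent by π/2, the wrist lands at roughly (0.3, 0.25): changing one joint angle repositions everything downstream, which is why pose estimation can work on the skeleton first.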

SAM3D thus marks a major advance in image processing, since it changes not only how objects are perceived but also how they are modeled. By moving into the third dimension, it raises the prospect of giving images a genuine physical “sense,” a foundation for more natural interactions between humans and machines.

Detailed key features of Meta SAM3 and SAM3D for advanced computer vision

Meta SAM3 and SAM3D are not just about segmentation and object reconstruction: these models introduce a set of features that change the usual approach to deep learning for image recognition.

The flagship feature, Promptable Concept Segmentation (PCS), precisely segments concepts expressed in natural language, merging linguistic understanding with real-time visual interpretation. This offers a double advantage: it removes the need to collect costly manual annotations for each new category, and it makes AI tools accessible to a wide audience, expert or novice.

Other notable features include:

- Intelligent temporal tracking in videos, which uses masklets to maintain object identity even in case of occlusion

- Handling of partial occlusions in 3D, allowing coherent reconstruction of partly hidden objects

- “Zero-Shot” generalization, allowing recognition of objects never seen during training

- A “presence token” that checks whether the requested concept appears in the image at all, avoiding interpretation errors and limiting visual hallucinations

- A unified architecture optimized for fast execution on still images and video streams
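Roughly, the presence token separates “is this concept in the image at all?” from “which regions match it?”. A minimal sketch of that gating, assuming one presence probability per image that is multiplied into each candidate mask's own matching score before thresholding; the `Candidate` type, the scores and the 0.5 threshold are illustrative assumptions, not SAM3's actual interface.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    """Hypothetical candidate mask produced for a text prompt."""

    mask_id: int
    local_score: float  # how well this region matches the prompt, in [0, 1]


def gated_detections(candidates, presence_prob, threshold=0.5):
    """Keep candidates whose presence-weighted score clears the threshold."""
    kept = []
    for c in candidates:
        # A low image-level presence probability vetoes every region at once,
        # even regions that look locally similar to the prompt.
        score = presence_prob * c.local_score
        if score >= threshold:
            kept.append((c.mask_id, score))
    return kept
```

With two candidates scoring 0.9 and 0.6, a presence probability of 0.95 keeps both, 0.6 keeps only the first, and 0.1 keeps none: a look-alike region cannot produce a detection on its own if the concept is judged absent.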

Together, these capabilities broaden the range of real-world uses in many fields, making it easier to create, automate and precisely analyze complex visual content.

Development supported by a gigantic Data Engine and its impact on accuracy

The power of Meta SAM3 / SAM3D also relies on the immense dataset on which the models were trained. More than 11 million annotated images were used to give the AI a fine and diverse understanding of the visual world.

This massive base is complemented by SA-Co (Segment Anything with Concepts), a collection that covers nearly four million distinct annotated concepts. This richness allows the AI to grasp subtle differences between similar objects, such as distinguishing a “front wheel” from a “rear wheel” on a vehicle, an example of how finely the model can refine its visual analysis in context.

The annotation process relies on a hybrid work loop: the AI performs an automatic pre-annotation that speeds up human work, then experts validate and correct it in real time. This process, about five times more efficient than traditional manual annotation, is the Data Engine that produced a very large, high-quality training set.
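The loop can be summarized as: the model proposes an annotation, a person accepts, corrects or rejects it, and the surviving annotations feed the dataset. The sketch below models only that control flow; `annotation_round`, the `Verdict` labels and the `propose` / `review` callbacks are hypothetical stand-ins, not Meta's tooling, and masks are represented as plain strings for brevity.

```python
from enum import Enum
from typing import Callable, Iterable


class Verdict(Enum):
    ACCEPT = "accept"    # pre-annotation is correct as is
    CORRECT = "correct"  # pre-annotation was fixed by the reviewer
    REJECT = "reject"    # pre-annotation is unusable


def annotation_round(
    images: Iterable[str],
    propose: Callable[[str], str],
    review: Callable[[str, str], tuple[Verdict, str]],
) -> tuple[list[tuple[str, str]], dict[Verdict, int]]:
    """One pass of automatic pre-annotation followed by human review."""
    dataset: list[tuple[str, str]] = []
    stats = {v: 0 for v in Verdict}
    for image in images:
        draft = propose(image)                 # model pre-annotates
        verdict, final = review(image, draft)  # human validates or fixes
        stats[verdict] += 1
        if verdict is not Verdict.REJECT:
            dataset.append((image, final))
    return dataset, stats
```

The efficiency gain comes from the `ACCEPT` path: every draft a reviewer can approve without redrawing saves most of the cost of annotating that image by hand, and the `stats` counts show how often that happens.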

This methodical approach gives Meta SAM3 / SAM3D significant robustness and reliability, reducing the errors and “hallucinations” that plagued previous systems. The result is a system that is both powerful and precise and could durably transform large-scale image processing.

Concrete applications of Meta SAM3 / SAM3D in industry, science, and leisure

The advanced capabilities of SAM3 and SAM3D are already attracting many sectors and generating numerous innovative applications.

In online commerce, Facebook Marketplace already uses SAM3D in its “View in Room” feature. A product photo, such as a chair, is instantly converted into a virtual 3D object so that the buyer can visualize it directly in their interior via augmented reality. This immersive experience changes the codes of remote selling and could significantly improve conversion rates.

Content creators on Instagram benefit from intelligent editing tools developed from SAM3. These automate complex commands, such as “blur the background” or “turn the sky black and white,” executed in a fraction of a second. Thus, visual creation becomes more intuitive and accessible, without requiring advanced technical skills.
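An edit such as “blur the background” reduces to compositing: segment the subject, blur the whole frame, then keep the original pixels where the subject mask is set and the blurred pixels elsewhere. A minimal pure-Python sketch on a grayscale image stored as nested lists, assuming the subject mask has already been produced by a segmentation model such as SAM3; the 3×3 box blur is a stand-in for whatever filter Instagram's tools actually apply.

```python
def box_blur(img):
    """3x3 mean filter; edge pixels average only their in-bounds neighbours."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            vals = [img[j][i]
                    for j in range(max(0, y - 1), min(h, y + 2))
                    for i in range(max(0, x - 1), min(w, x + 2))]
            out[y][x] = sum(vals) / len(vals)
    return out


def blur_background(img, subject_mask):
    """Keep pixels where the mask is True; replace the rest with blurred values."""
    blurred = box_blur(img)
    return [[img[y][x] if subject_mask[y][x] else blurred[y][x]
             for x in range(len(img[0]))]
            for y in range(len(img))]
```

Keeping segmentation and filtering separate means the same mask can drive other edits (recoloring the sky, desaturating the background) by swapping only the filter.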

In natural sciences, Conservation X Labs uses SAM3 to analyze immense volumes of images and videos captured by camera traps. Automatically identifying rare or endangered species greatly facilitates ecological monitoring and biodiversity protection.

Finally, robotics benefits from the improved perception provided by SAM3D, which is essential for manipulating objects precisely in complex environments. Robots can better estimate where to grasp an object, move through cluttered spaces more easily and respond to voice commands about objects they have never encountered, an important advance for modern robotics.
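A reconstructed mesh gives a robot geometry it can reason about. As a deliberately naive illustration (real grasp planners also weigh surface normals, friction and gripper width), the sketch below takes the vertices of a reconstructed object, uses their centroid as the approach target and closes a parallel gripper along the narrowest bounding-box dimension. The heuristic and the `naive_grasp` name are assumptions for illustration, not how SAM3D or any particular robot stack computes grasps.

```python
def naive_grasp(vertices):
    """Return (centroid, axis) for a list of (x, y, z) mesh vertices.

    axis is the index (0=x, 1=y, 2=z) of the narrowest bounding-box
    extent, i.e. the direction along which a parallel gripper would close.
    """
    if not vertices:
        raise ValueError("empty mesh")
    n = len(vertices)
    centroid = tuple(sum(v[k] for v in vertices) / n for k in range(3))
    extents = [max(v[k] for v in vertices) - min(v[k] for v in vertices)
               for k in range(3)]
    axis = min(range(3), key=lambda k: extents[k])
    return centroid, axis
```

For a box 4 cm wide, 10 cm deep and 20 cm tall, this picks the x axis (the 4 cm side) and targets the box's center.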

Current constraints and limitations of Meta SAM3 / SAM3D to consider

Despite their rapid progress in AI-based image recognition, SAM3 and SAM3D are not free from challenges and technical constraints.

For example, the quality of the generated 3D textures is sometimes only moderate. Fine details such as hair, thin mesh-like structures or some transparent objects may appear blurry or simplified in the model's output. This limits the use of these objects in productions that require very high visual fidelity, such as high-end films or AAA games.

Another major challenge lies in understanding the physics of environments. The model “sees” shapes but does not understand physical properties such as gravity, solidity or collision. As a result, reconstructed 3D objects sometimes pass through or intersect each other in ways that violate physical laws, a limitation that has to be fixed manually in post-production.
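Because the reconstruction has no notion of solidity, a cheap post-processing check can at least flag suspicious pairs for review. A sketch, assuming each reconstructed object is summarized by its axis-aligned bounding box (AABB); overlapping boxes are a necessary but not sufficient sign of interpenetration, so flagged pairs would still need an exact mesh test or a manual fix. The function names are illustrative.

```python
from itertools import combinations

Vec3 = tuple[float, float, float]
AABB = tuple[Vec3, Vec3]  # (min corner, max corner)


def boxes_overlap(a: AABB, b: AABB) -> bool:
    """True if the boxes share volume on all three axes.

    Strict inequalities mean boxes that merely touch (a cup resting on a
    table) are not reported.
    """
    (amin, amax), (bmin, bmax) = a, b
    return all(amin[k] < bmax[k] and bmin[k] < amax[k] for k in range(3))


def flag_intersections(objects: dict[str, AABB]) -> list[tuple[str, str]]:
    """List every pair of object names whose bounding boxes overlap."""
    return [(m, n) for (m, a), (n, b) in combinations(objects.items(), 2)
            if boxes_overlap(a, b)]
```

In a scene where a cup sits exactly on a table but a vase has been reconstructed sinking 5 cm into it, only the `("table", "vase")` pair is flagged.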

Finally, visual hallucinations, although reduced thanks to the presence token, persist in complex and cluttered scenes where objects look very similar. Video tracking can sometimes fail, requiring human control to avoid critical errors, especially in surveillance or medical fields.

The high video memory (VRAM) consumption of these models also limits their deployment on mobile devices. For now, a powerful computing environment and a robust internet connection are essential, which slows the development of autonomous on-device uses.
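A rough lower bound on memory is the model weights alone: parameter count times bytes per parameter. Activations, a video tracker's memory of past frames and framework overhead all come on top. The sketch uses a hypothetical parameter count, not an official SAM3 or SAM3D figure.

```python
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "bf16": 2, "int8": 1}


def weight_memory_gib(num_params: int, dtype: str = "fp16") -> float:
    """Memory needed just to hold the weights, in GiB (2**30 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 2**30


if __name__ == "__main__":
    hypothetical_params = 1_000_000_000  # illustrative, not SAM3's real size
    for dtype in ("fp32", "fp16", "int8"):
        print(f"{dtype}: {weight_memory_gib(hypothetical_params, dtype):.2f} GiB")
```

For a hypothetical one-billion-parameter model, the weights alone take about 3.73 GiB in fp32 and 1.86 GiB in fp16, before any image or video has been processed, which already strains the memory budget of most phones.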

Comparison between Meta SAM3 / SAM3D and other AI image recognition technologies

In this booming sector, there are many alternatives to Meta's models, some more specialized, others complementary.

| Technology | Specificity | Strengths | Limitations |
|---|---|---|---|
| Meta SAM3 / SAM3D | Advanced segmentation and 3D reconstruction | Versatility, zero-shot generalization, unified image and video processing | High resource consumption, limited 3D texture quality |
| Google DeepMind Gemini 3 | Full multimodality and logical reasoning | Excellent at complex document analysis | Less effective for pure 3D geometry |
| OpenAI Sora 2 | Generation and understanding of dynamic video | Creation of realistic physical scenes | Less suited to segmentation |
| YOLO | Fast object detection and counting | Extremely lightweight and fast | Less precise, no 3D reconstruction |
| MedSAM | Specialized in medical imaging | Adapted to medical images, high precision in that domain | Not versatile, very targeted use |

This diversity gives users a broad range of options suited to every use: the general-purpose Meta SAM3 / SAM3D sits alongside specialized solutions with more specific profiles.

Accessibility, costs, and usage models of Meta SAM3 / SAM3D in the current technological landscape

Meta has chosen an ambitious open policy for its SAM3 and SAM3D models. The model weights are free and accessible for research, notably via popular platforms like Hugging Face. This strategy aims to establish an open technological standard for AI-based image recognition and image processing.

However, real-time execution requires powerful infrastructure, especially to process an image in under 30 milliseconds. High-performance GPUs such as the H200 are essential, which limits this level of performance to professionals and specialized data centers.
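The 30-millisecond figure translates directly into throughput, which is what decides whether video can be processed live:

$$
\frac{1000\ \text{ms/s}}{30\ \text{ms/image}} \approx 33\ \text{images/s},
\qquad
\frac{1000\ \text{ms/s}}{30\ \text{frames/s}} \approx 33.3\ \text{ms/frame}.
$$

At that latency, a single GPU keeps pace with a 30 fps stream only if decoding, tracking and rendering together add no more than about 3 ms per frame.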

For general users, access usually goes through the cloud and web interfaces such as the Segment Anything Playground, which allow free testing of these tools. To fully integrate these models into commercial products, however, one must comply with the specific, and potentially costly, terms of the “SAM License,” which regulates use and protects Meta's intellectual property.

In summary, the technology is widely available for research, but industrializing it requires significant investment in hardware and legal agreements. This gap between free code and the cost of using it is common in cutting-edge technology.

What is the Meta SAM3 model?

Meta SAM3 is an artificial intelligence model capable of automatically and quickly segmenting any object present in an image or video from a simple textual description or a click.

How does SAM3D transform images into 3D objects?

Drawing on reference data from multi-camera capture and LiDAR scans, SAM3D reconstructs objects in three dimensions from a single photo, generating textured meshes that can be manipulated.

What are the advantages of Promptable Concept Segmentation (PCS)?

Promptable Concept Segmentation lets users ask the model to precisely isolate objects from natural language descriptions, without any task-specific training, which makes the tools both accessible and powerful.

Which sectors benefit the most from Meta SAM3 / SAM3D?

Sectors such as online commerce, content creation, robotics, video surveillance, and biodiversity conservation actively exploit these technologies to improve their processes.

Are SAM3 / SAM3D freely accessible?

The models are freely accessible for research via open platforms, but commercial use often requires specific licenses and costly computing infrastructure.